For many companies, the move to the cloud started with the need for more computing power, flexibility, and scalability. The trend has been greatly accelerated by technological advances and today’s work-from-home environment. Despite investments in the millions and the bells and whistles that come with cloud solutions, many companies are surprised to find that the core issue of computational efficiency remains largely unsolved. The problem is two-fold: performance often hits a ceiling beyond which adding resources yields only minimal gains, and I/O inefficiencies may further offset gains in computational performance. We focus on the first issue in this article.

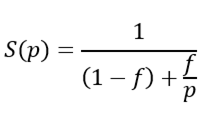

The phenomenon of a performance ceiling can be explained by Amdahl’s law. Originally from computer engineering, Amdahl’s law gives the theoretical speed-up when multiple processors are used. It is formulated as follows:

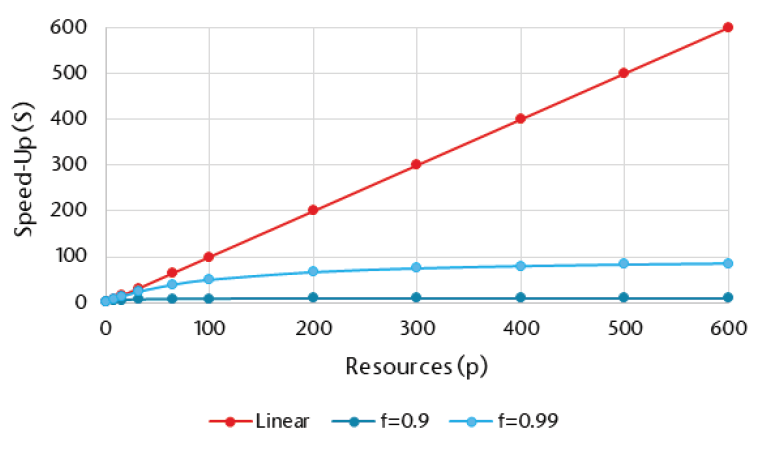

where S is the theoretical speed-up, p is the number of processing threads/resources across which the parallel task is split, and f is the fraction of the program that can be executed in parallel. Clearly, when f is 1, i.e. the program executes completely in parallel, we have S = p, which means the speed-up is exactly linear in the number of threads. This is the ideal situation, where the system benefits fully from added resources. What if f is less than 1? We compared the theoretical speed-up for f values of 1, 0.99 and 0.9.

Even for a small reduction of 0.01 (1%) in the parallel portion of the run, the theoretical speed-up quickly flattens as resources are added, and almost no improvement is seen beyond 500 processing resources. For the 0.9 case, the speed-up tops out at around 10 regardless of how many processors you throw at the problem. It’s not uncommon to see values between 0.9 and 0.99 in real life for legacy systems, which were built when words like parallelism and scalability were not yet in the vocabulary.
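The ceiling follows directly from the formula. Assuming the standard form of Amdahl’s law with the symbols S, p and f as defined above, letting p grow without bound gives:

$$
S(p) = \frac{1}{(1 - f) + \dfrac{f}{p}}, \qquad \lim_{p \to \infty} S(p) = \frac{1}{1 - f}
$$

So f = 0.9 can never exceed a speed-up of 1/0.1 = 10, and f = 0.99 can never exceed 1/0.01 = 100. At p = 500 with f = 0.99, the formula already gives S = 1/(0.01 + 0.00198) ≈ 83.5, which is why adding resources beyond that point buys very little.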

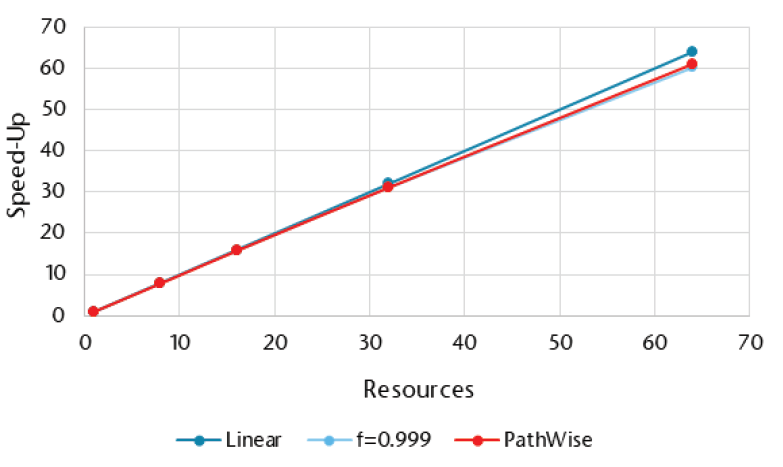
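The comparison of f values can be reproduced in a few lines. The following is a minimal sketch, assuming the standard form of Amdahl’s law; the function names `amdahl_speedup` and `speedup_table` and the chosen processor counts are illustrative and not taken from the original analysis.

```python
def amdahl_speedup(f: float, p: int) -> float:
    """Theoretical speed-up for parallel fraction f on p processors."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must be in [0, 1]")
    if p < 1:
        raise ValueError("p must be at least 1")
    # The serial part (1 - f) is unaffected; the parallel part f is split p ways.
    return 1.0 / ((1.0 - f) + f / p)


def speedup_table(fractions, processors):
    """Map each parallel fraction to its speed-ups over the processor counts."""
    return {f: [amdahl_speedup(f, p) for p in processors] for f in fractions}


if __name__ == "__main__":
    # Illustrative processor counts (an assumption, not the article's x-axis).
    processors = [1, 10, 100, 500, 1000, 10000]
    table = speedup_table([1.0, 0.99, 0.9], processors)
    print("p".rjust(6), *(f"f={f}".rjust(10) for f in table))
    for i, p in enumerate(processors):
        print(str(p).rjust(6), *(f"{table[f][i]:10.1f}" for f in table))
```

Running the script prints one row per processor count. The f = 1 column grows linearly with p, while the 0.99 and 0.9 columns level off below 100 and 10 respectively.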

How does PathWise compare under Amdahl’s law? We performed a test using a complex real-life model that is optimized to run in parallel on GPUs (Graphics Processing Units). Here the resources on the x-axis are the number of GPUs, and each GPU has nearly 3000 processing cores, so 64 GPUs provide about 180,000 cores. Although GPU and CPU cores are not directly comparable, it is nearly impossible to obtain a similar number of CPU cores. The following graph shows the speed-up results:

We can see that PathWise’s actual results closely trace the 0.999 line, which implies the program is 99.9% parallel in execution. The efficiency is so high that it almost achieves the theoretical ideal of linear scalability (f = 1). This is by no means easy to achieve. PathWise was built from the ground up for high-performance parallel computing, and it took years of optimization based on industry-specific requirements, along with many proprietary technologies in the computation engine and the middleware, to make sure it runs just as fast in real-life settings as in lab settings.
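One way to read a parallel fraction off a speed-up curve is to invert Amdahl’s law: from 1/S = (1 - f) + f/p it follows that f = (1 - 1/S) / (1 - 1/p). The sketch below assumes that standard form and is not a description of how the PathWise results were analyzed. The function names are hypothetical, and the speed-up it inverts is computed from the formula, not measured.

```python
def amdahl_speedup(f: float, p: int) -> float:
    """Theoretical speed-up for parallel fraction f on p processors."""
    return 1.0 / ((1.0 - f) + f / p)


def estimate_parallel_fraction(speedup: float, p: int) -> float:
    """Invert Amdahl's law: the f that would yield `speedup` on p processors."""
    if p < 2:
        raise ValueError("need p >= 2 to estimate f")
    if not 1.0 <= speedup <= p:
        raise ValueError("speedup must lie in [1, p] under Amdahl's law")
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / p)


if __name__ == "__main__":
    # Round trip with a theoretical value (NOT a measurement):
    # the speed-up that f = 0.999 would give on 64 resources.
    s = amdahl_speedup(0.999, 64)
    print(f"speed-up on 64 resources: {s:.1f}")
    print(f"recovered f: {estimate_parallel_fraction(s, 64):.4f}")
```

Applied to each point of a measured curve, an estimate like this shows whether the implied f stays stable as resources grow, which is what tracing a single theoretical line amounts to.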

The main takeaway of this experiment is that the cloud should not be taken as a panacea for your computing woes. While it helps to some extent, the return on investment quickly diminishes if the system is not designed for parallel computing or, in other words, does not scale. Without visibility into the computer algorithms buried deep in the system, true high-performance computing remains elusive.
