Where Cyber Meets Physical: Rethinking Risk in the AI Age

From drones that dodge surveillance to deepfakes that unlock doors, AI is reshaping physical security. It’s time for risk managers to rethink how they protect their organizations.

Key Takeaways

- AI is blurring the lines between cyber and physical threats; risk managers must reconsider how they secure buildings, systems and people.

- Low-cost, easily accessible and high-impact tools like drones and 3D-printed weapons are empowering threat actors to carry out physical security breaches with greater ease and precision.

- Traditional defenses aren’t enough; organizations must update and adapt their physical security strategies and model AI-enabled threat scenarios.

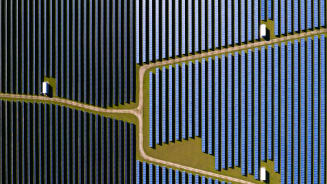

AI is changing the nature of physical threats. By analyzing publicly available data, such as satellite imagery, floor plans, social media activity and employee movement patterns, attackers can simulate patrol routes, identify surveillance blind spots and map access points to bypass physical security measures. These tactics are fueling a rise in non-traditional incidents like drone-enabled attacks and unauthorized perimeter entry.

This shift is challenging long-held assumptions about physical security. All businesses need to reassess how they manage emerging threats — but for high-value sectors, the urgency is even greater. AI-enabled disruptions are increasingly exploiting overlooked vulnerabilities and crossing traditional boundaries.

The convergence of cyber and physical risk has been acknowledged for years, but artificial intelligence is now accelerating that shift in ways that demand urgent attention. What was once a theoretical overlap has become a real and rapidly evolving threat landscape. AI is enabling new forms of physical disruption: from the misuse of autonomous drones and deepfake-enabled access breaches to the sabotage of building management systems. These are no longer future risks. They are happening now, across industries and geographies.

As Brent Rieth, Global Head of Cyber Solutions, notes, “In the U.S., we’re seeing how AI is lowering the barrier for cyber-physical attacks — turning mid-level hackers into high-impact disruptors. Legacy infrastructure and industrial systems are increasingly vulnerable, and the speed and precision of AI-powered intrusions demand a new level of preparedness.”

6 Ways AI Is Reshaping Physical Security

-

01

AI-Augmented Social Outreach

AI is enhancing the tools available for social influence and immersive outreach. Organizations face reputational and operational risks from AI-driven campaigns. Monitoring strategies are essential.

-

02

Democratization of Dual-Use Technologies

Organizations must track emerging threats and ensure policies address dual-use technology risks.

-

03

Social Engineering and Identity Spoofing

AI-generated deepfakes and impersonation tactics are increasingly enabling sophisticated security breaches, including tailgating and insider threats. Organizations must strengthen identity verification protocols and train staff to recognize deception.

-

04

AI-Enhanced Surveillance and Physical Intrusion

AI can analyze CCTV surveillance data to plan intrusions with precision. Deepfakes and cloned credentials bypass traditional access controls, such as facial recognition and RFID. Organizations should review physical security systems and integrate AI-aware countermeasures.

-

05

Autonomous Drone Misuse

Organizations must assess vulnerabilities at strategic chokepoints and prepare for low-cost, high-impact disruptions.

-

06

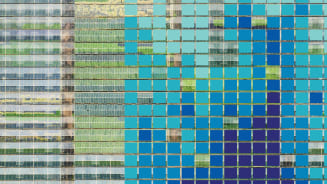

AI-Driven Cyber-Physical Attacks on IoT

Generative AI lowers the barrier for attacks on industrial control systems. Manipulated physical parameters can cause fires or equipment failure. Organizations must prioritize IoT security audits and update legacy systems to prevent exploitation.

AI Is Driving a New Era of Business Vulnerability

AI is creating new fault lines in physical security — and many organizations are not prepared. Threat actors now use machine learning to gather intelligence, simulate breaches and execute highly targeted intrusions with minimal human input. These events are fast, precise and increasingly difficult to detect.

Maliciously deployed large language models (LLMs) are lowering the barrier to entry for advanced attacks. Adversaries can train custom models to target specific buildings, guide payload deployment and trigger actions based on environmental conditions. Static surveillance and locked perimeters are no longer enough.

As cyber budgets continue to grow while physical security investments lag, a critical imbalance is emerging and creating exploitable gaps. Building management systems (BMS), which oversee access control, HVAC, lighting and surveillance, are becoming prime targets. AI-enabled attackers are quick to spot these weak points and use them to bypass traditional defenses.

The UK’s National Cyber Security Centre (NCSC) has highlighted a sharp escalation in cyber-physical threats. In 2024, the NCSC reported a threefold increase in the most severe cyber incidents, many of which caused widespread operational and physical disruption across multiple sites [1].

As this is a new and rising threat, specific data on AI-enabled attacks causing direct physical damage is limited. However, government publications, including those from the UK, highlight the urgent need for “proactive security strategies, interdisciplinary collaboration, and the development of robust frameworks to mitigate evolving cyber threats” [2].

AI is accelerating economic growth and transformation, but it’s also exposing new layers of unprotected vulnerability — especially in asset portfolios where traditional insurance models haven’t kept pace. Bridging this gap requires rethinking how we quantify and insure emerging physical risks.

Threat Scenario

- Threat actor gains access to a facility’s control room and installs an unauthorized AI system

- Once embedded, the AI can:

  - Learn operational patterns

  - Identify weak points

  - Disable alarms and unlock doors

  - Exfiltrate sensitive data

  - Trigger destructive actions (e.g. delete backups)

  - Cripple recovery capabilities

Risk Amplifiers

- Reduced CapEx on physical security: Many organizations have deprioritized upgrades to access control systems, leaving legacy infrastructure vulnerable.

- Lack of AI governance: Inadequate AI monitoring protocols can enable the creation and use of AI models that may behave unpredictably or be exploited for malicious purposes.

- Hybrid threat vectors: These attacks blur the line between cyber and physical, complicating detection and response.

Implications for Risk Managers

- Reassess Political Violence (PV) Programs: Update Probable Maximum Loss (PML) modeling to reflect AI-enabled physical threats.

- Unify Security Strategy: Treat cyber and physical security as a single domain with shared intelligence and protocols.

- Implement AI Governance: Monitor AI behavior in operational systems with anomaly detection and kill-switch capabilities.
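
To make the anomaly detection and kill-switch idea concrete, here is a minimal Python sketch. It assumes a single hypothetical metric (door-unlock commands issued per hour by an AI system inside a building management system), a simple z-score test and a rule that trips after three consecutive anomalies. The function names, thresholds and sample readings are illustrative assumptions, not a description of any particular product or of the authors' method.

```python
from statistics import mean, stdev


def is_anomalous(history, value, threshold=3.0):
    """Return True if value is more than `threshold` standard deviations from the mean of history."""
    if len(history) < 2:
        return False  # not enough data to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


class KillSwitch:
    """Hypothetical kill switch: engages after several consecutive anomalies."""

    def __init__(self, max_anomalies=3):
        self.max_anomalies = max_anomalies
        self.consecutive = 0
        self.engaged = False

    def observe(self, anomalous):
        """Record one observation and return True once the switch is engaged."""
        if self.engaged:
            return True
        self.consecutive = self.consecutive + 1 if anomalous else 0
        if self.consecutive >= self.max_anomalies:
            self.engaged = True
        return self.engaged


def monitor(baseline, readings, threshold=3.0, max_anomalies=3):
    """Return the index of the reading at which the kill switch engages, or None."""
    switch = KillSwitch(max_anomalies)
    history = list(baseline)
    for index, value in enumerate(readings):
        anomalous = is_anomalous(history, value, threshold)
        if switch.observe(anomalous):
            return index
        if not anomalous:
            history.append(value)  # only normal readings extend the baseline
    return None


# Illustrative data only: unlock commands per hour under normal operation.
baseline = [10, 11, 9, 10, 12, 10, 11, 9]
tripped_at = monitor(baseline, [10, 40, 42, 41, 10])
```

In this sketch an isolated spike does not trip the switch; only a sustained run of abnormal readings does. In practice, the metric, threshold and response (alerting, isolating the system, reverting to manual control) would need to be chosen for each site.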

Recommendations

- Conduct a physical security audit focused on AI vulnerabilities in building systems.

- Model AI-enabled threat scenarios in PML studies, including malicious autonomous systems and drone swarm attacks.

- Collaborate across departments (e.g. IT, facilities and risk) to ensure cohesive defense strategies.

- Engage with insurers to develop tailored coverage solutions for AI-driven physical damage and business interruption (PD/BI) and cyber-physical damage (CYPD).

- Implement layered access controls and encryption for AI models and training data stored in physical systems.

- Establish governance protocols for lifecycle management of AI assets.
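
As a rough illustration of modeling AI-enabled scenarios in a PML study, the Python sketch below compares a few hypothetical scenarios and reports the largest modeled loss. It assumes, as a simplification, that loss is a damage ratio applied to property value plus business interruption for each day of downtime. The scenario names, ratios and monetary values are invented for illustration; a real PML study would rely on engineering and actuarial models.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    damage_ratio: float   # assumed fraction of property value damaged (0 to 1)
    downtime_days: int    # assumed days of business interruption


def scenario_loss(scenario, property_value, daily_bi):
    """Physical damage plus business interruption for one scenario."""
    return scenario.damage_ratio * property_value + scenario.downtime_days * daily_bi


def largest_modeled_loss(scenarios, property_value, daily_bi):
    """Return (scenario name, loss) for the worst modeled scenario."""
    losses = {s.name: scenario_loss(s, property_value, daily_bi) for s in scenarios}
    worst = max(losses, key=losses.get)
    return worst, losses[worst]


# Hypothetical inputs, for illustration only.
scenarios = [
    Scenario("Drone swarm attack", damage_ratio=0.10, downtime_days=14),
    Scenario("BMS sabotage causing fire", damage_ratio=0.25, downtime_days=30),
    Scenario("Deepfake-enabled intrusion", damage_ratio=0.02, downtime_days=3),
]
worst_name, worst_loss = largest_modeled_loss(
    scenarios, property_value=100_000_000, daily_bi=200_000
)
```

Taking the maximum across scenarios is only one simplified reading of PML. The point of the sketch is that adding AI-enabled scenarios to the set can change which event drives the estimate.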

AI-enabled threats and their risk implications

| Risk Type | How It Works | Primary Risks | Key Takeaway |
|---|---|---|---|
| AI-enabled intelligence gathering | AI analyzes publicly available data (satellite imagery, social media, floor plans) to map vulnerabilities and assist with planning physical intrusions. | Exposure of sensitive site details; attackers can identify security gaps and plan targeted breaches. | Monitor and minimize publicly accessible data; update security protocols regularly. |
| AI-orchestrated access breaches | AI uses surveillance data, behavioral patterns and spoofed credentials (e.g. deepfakes, cloned badges) to bypass physical access controls and execute intrusions. | Tailgating, insider threats and unauthorized access to restricted areas. | Strengthen identity verification, monitor access logs and train staff to detect deception. |
| AI-facilitated cyber-physical attacks | AI exploits weak physical access points and building systems to breach cyber defenses, resulting in real-world disruptions via digital entry. | Pathways from physical breach to cyber compromise; potential for operational disruption, data theft and cascading failures. | Integrate cyber and physical security planning to close gaps and prevent cross-domain attacks. |
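
One way to act on the "monitor access logs" takeaway is to look for badge activity that a single person could not plausibly produce, which may indicate a cloned credential. The Python sketch below flags a badge used at two different sites within a short window. The `BadgeEvent` fields, the 30-minute window and the sample events are assumptions made for illustration, not a reference to any specific access control system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class BadgeEvent:
    badge_id: str
    site: str
    door: str
    time: datetime


def flag_possible_clones(events, min_travel=timedelta(minutes=30)):
    """Return pairs of events where one badge appears at two sites faster than min_travel."""
    last_seen = {}
    flagged = []
    for event in sorted(events, key=lambda e: e.time):
        previous = last_seen.get(event.badge_id)
        if (
            previous is not None
            and previous.site != event.site
            and event.time - previous.time < min_travel
        ):
            flagged.append((previous, event))
        last_seen[event.badge_id] = event
    return flagged


# Hypothetical log entries, for illustration only.
events = [
    BadgeEvent("B1", "HQ", "Lobby", datetime(2025, 1, 6, 9, 0)),
    BadgeEvent("B2", "HQ", "Lobby", datetime(2025, 1, 6, 9, 0)),
    BadgeEvent("B2", "HQ", "Server room", datetime(2025, 1, 6, 9, 5)),
    BadgeEvent("B1", "Warehouse", "Gate", datetime(2025, 1, 6, 9, 10)),
]
suspicious = flag_possible_clones(events)
```

Here badge B1 is flagged because it appears at two sites ten minutes apart, while B2 moving between doors at the same site is not. A flag of this kind is a prompt for review rather than proof of a breach.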

“Organizations should proactively revisit their crisis management and business continuity plans, and review their insurance programs, to ensure coverage limits are appropriate for the emerging risks imposed by AI-enabled attacks,” says Mani Dhesi, Head of Growth and Client Management, Cyber Solutions in Asia Pacific. “As threat landscapes evolve, greater awareness and understanding of physical security vulnerabilities are essential, even as cyber security remains the dominant focus.”

What Can Risk Managers Do to Reassess AI-Driven Physical Threats?

As artificial intelligence becomes increasingly embedded in enterprise operations, organizations must navigate a fragmented and rapidly evolving regulatory landscape.

Existing privacy and cybersecurity laws, including the General Data Protection Regulation [3] in Europe, the California Consumer Privacy Act [4] in the U.S. and sector-specific standards such as the Health Insurance Portability and Accountability Act [5] and the Payment Card Industry Data Security Standard [6], already impose stringent requirements on data governance and infrastructure. These extend to systems supporting AI, mandating physical and environmental safeguards such as secure facilities, controlled access, and continuous monitoring across data centers, building management systems, and operational technology.

In parallel, jurisdictions are introducing AI-specific governance frameworks, notably the European Union AI Act [7] and Singapore’s AI governance initiatives [8], designed to promote responsible innovation. These efforts reflect growing recognition of AI’s transformative potential and associated risks. However, they remain nascent and often lag behind the pace of technological advancement.

This regulatory asymmetry has created a critical blind spot: AI-enabled threats to physical security. From autonomous systems to smart infrastructure, AI introduces new vectors of risk that are not comprehensively addressed by either traditional cybersecurity laws or emerging AI governance. These gaps expose organizations to operational vulnerabilities that are often underestimated or overlooked.

“For risk managers, the imperative is clear. They must not only ensure compliance with established regulatory regimes that partially address AI infrastructure but also anticipate and mitigate emerging threats that fall outside current frameworks,” Larabella Myers, Engagement Manager, Cyber Solutions in Asia Pacific, notes.

“This demands a forward-looking, cross-functional approach to governance — one that integrates legal, technical, and operational perspectives to build resilience in an increasingly intelligent and interconnected threat landscape.”

Integrated Strategies to Mitigate AI-Driven Physical Risks

| Category | Strategy | Why It Matters |
|---|---|---|
| 1. Threat Landscape Analysis | Review Threat Exposure | Reassess operational footprint across physical, digital and geopolitical domains. Analyze exposure to AI-enabled political violence and dual-use technologies (e.g., drones, generative AI, IoT). Integrate horizon scanning and threat intelligence into enterprise risk views. |
| | Model Threat Scenarios | Develop structured scenarios involving AI tactics (e.g., autonomous drones, deepfakes, cyber-physical sabotage). Use blast-impact and Probable Maximum Loss (PML) models to simulate and quantify exposure. |
| 2. Security Controls & Access Management | Revisit Controls | Evaluate effectiveness of physical and cyber controls against AI-enhanced threats. Identify gaps in surveillance, access control and incident response. Integrate behavioral anomaly detection and AI-enhanced monitoring. Embed AI into tabletop exercises and response playbooks. |
| | Access Control & Segmentation | Apply multifactor authentication, biometrics and physical segmentation to limit unauthorized movement. |
| | AI-Powered Defense Systems | Deploy AI-based surveillance and intrusion detection to autonomously identify and neutralize threats. |
| 3. Preparedness & Simulation | Threat Scenario Modeling & Simulation | Simulate AI-driven attacks (e.g., drone swarms, guided intrusions) to assess impact and prioritize defenses. |
| | Red Team Exercises | Engage external experts to simulate AI-based attacks and uncover hidden vulnerabilities. |
| 4. Collaboration & Awareness | Cross-Disciplinary Risk Teams | Break down silos by collaborating across IT, physical security, legal and insurance teams. |
| | Employee Training & Awareness | Train staff to detect AI reconnaissance, phishing and impersonation attempts. |

In the age of AI-enabled adversaries, resilience starts with modeling the risks at the intersection of digital and physical threats and implementing data-driven strategies across analytics, collaboration and insurance.

Take Action: Bridge the Cyber-Physical Divide

Is your security strategy keeping pace with AI-driven hybrid threats? Connect with our experts to align digital and physical risk models for stronger resilience.

[1] NCSC Annual Review 2024, NCSC.GOV.UK

[2] Emerging technologies and their effect on cyber security, GOV.UK

[3] Complete guide to GDPR compliance, GDPR.eu

[4] California Consumer Privacy Act (CCPA), State of California Department of Justice – Office of the Attorney General

[5] Health Insurance Portability and Accountability Act of 1996 (HIPAA), U.S. Centers for Disease Control and Prevention

[6] PCI Security Standards Overview, PCI Security Standards Council, LLC

[7] AI Act, European Commission

[8] Evolving AI Laws in Asia: Regulations and Key Challenges, Korum Consulting Limited

General Disclaimer

This document is not intended to address any specific situation or to provide legal, regulatory, financial, or other advice. While care has been taken in the production of this document, Aon does not warrant, represent or guarantee the accuracy, adequacy, completeness or fitness for any purpose of the document or any part of it and can accept no liability for any loss incurred in any way by any person who may rely on it. Any recipient shall be responsible for the use to which it puts this document. This document has been compiled using information available to us up to its date of publication and is subject to any qualifications made in the document.

Terms of Use

The contents herein may not be reproduced, reused, reprinted or redistributed without the expressed written consent of Aon, unless otherwise authorized by Aon. To use information contained herein, please write to our team.
